LLMs

Sep 23, 2025

Reasoning Through Molecular Synthetic Pathways with Generative AI

A recurring challenge in molecular design, whether for pharmaceutical, chemical, or material applications, is creating synthesizable molecules. Synthesizability...

7 MIN READ

Sep 23, 2025

Build a Retrieval-Augmented Generation (RAG) Agent with NVIDIA Nemotron

Unlike traditional LLM-based systems that are limited by their training data, retrieval-augmented generation (RAG) improves text generation by incorporating...

17 MIN READ

Sep 18, 2025

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

As AI models grow larger and more sophisticated, inference, the process by which a model generates responses, is becoming a major challenge. Large language...

11 MIN READ

Sep 16, 2025

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

Deploying large language models (LLMs) poses a challenge in optimizing inference efficiency. In particular, cold start delays—where models take significant...

13 MIN READ

Sep 15, 2025

New Open Source Qwen3-Next Models Preview Hybrid MoE Architecture Delivering Improved Accuracy and Accelerated Parallel Processing across NVIDIA Platform

As AI models grow larger and process longer sequences of text, efficiency becomes just as important as scale. To showcase what’s next, Alibaba...

5 MIN READ

Sep 11, 2025

Modeling Attacks on AI-Powered Apps with the AI Kill Chain Framework

AI-powered applications are introducing new attack surfaces that traditional security models don’t fully capture, especially as these agentic systems gain...

12 MIN READ

Sep 11, 2025

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

After training AI models, a variety of compression techniques can be used to optimize them for deployment. The most common is post-training quantization (PTQ),...

10 MIN READ

Sep 10, 2025

Deploy Scalable AI Inference with NVIDIA NIM Operator 3.0.0

AI models, inference engine backends, and distributed inference frameworks continue to evolve in architecture, complexity, and scale. With the rapid pace of...

7 MIN READ

Sep 09, 2025

How to Connect Distributed Data Centers Into Large AI Factories with Scale-Across Networking

AI scaling is incredibly complex, and new techniques in training and inference are continually demanding more out of the data center. While data center...

6 MIN READ

Sep 09, 2025

NVIDIA Rubin CPX Accelerates Inference Performance and Efficiency for 1M+ Token Context Workloads

Inference has emerged as the new frontier of complexity in AI. Modern models are evolving into agentic systems capable of multi-step reasoning, persistent...

5 MIN READ

Sep 08, 2025

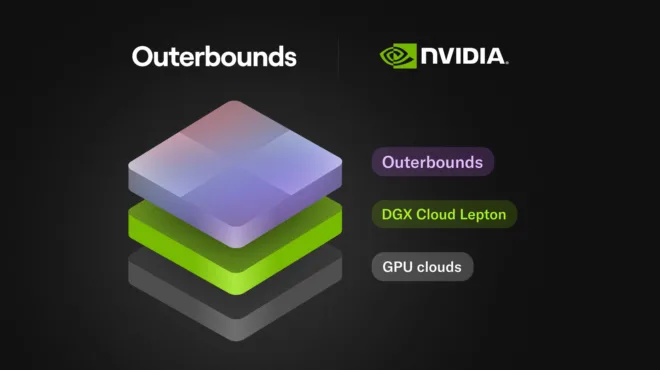

How to Build AI Systems In House with Outerbounds and DGX Cloud Lepton

It’s easy to underestimate how many moving parts a real-world, production-grade AI system involves. Whether you’re building an agent that combines internal...

10 MIN READ

Sep 07, 2025

Register for the Global Webinar: How to Prepare for NVIDIA Generative AI Certification

Join a global webinar on Oct. 7 to get everything you need to succeed on the NVIDIA generative-AI certification exams, including the new professional level...

1 MIN READ

Sep 05, 2025

Accelerate Large-Scale LLM Inference and KV Cache Offload with CPU-GPU Memory Sharing

Large Language Models (LLMs) are at the forefront of AI innovation, but their massive size can complicate inference efficiency. Models such as Llama 3 70B and...

7 MIN READ

Sep 03, 2025

Accelerate Autonomous Vehicle Development with the NVIDIA DRIVE AGX Thor Developer Kit

Autonomous vehicle (AV) technology is rapidly evolving, fueled by ever-larger and more complex AI models deployed at the edge. Modern vehicles now require not...

8 MIN READ

Aug 27, 2025

How to Scale Your LangGraph Agents in Production From A Single User to 1,000 Coworkers

You’ve built a powerful AI agent and are ready to share it with your colleagues, but have one big fear: Will the agent work if 10, 100, or even 1,000...

10 MIN READ

Aug 25, 2025

NVFP4 Trains with Precision of 16-Bit and Speed and Efficiency of 4-Bit

In recent years, AI workloads have grown exponentially—not only in the deployment of large language models (LLMs) but also in the demand to process ever more...

9 MIN READ